Can you try to increase Prometheus memory?

Percona Monitoring and Management

Should I re-run the container with an additional argument, like this?

docker run -d -p 80:80 --volumes-from pmm-data --name pmm-server --restart always -e METRICS_MEMORY=4194304 percona/pmm-server:1.2.0

And how can I check that the memory change was applied?

Yes, please.

To check, run the following command:

ps ax | grep prometheus

The target heap size shown in the output should be 4194304*1024 = 4294967296 bytes.
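The multiplication above can be done in the shell to get the exact number to look for in the `ps` output. This is only a sketch: it assumes METRICS_MEMORY is interpreted as kilobytes, which matches the 4294967296 value that appears in the process list later in this thread.

```shell
# Value passed with -e METRICS_MEMORY=... in the docker run command above.
# Assumption: the unit is kilobytes.
METRICS_MEMORY=4194304

# Convert kilobytes to bytes, the unit of the Prometheus heap-size option.
expected_heap_bytes=$((METRICS_MEMORY * 1024))

echo "$expected_heap_bytes"
```

The printed number is what the `storage.local.target-heap-size` option should show once the container has been re-created with the new setting.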

It’s some kind of magic, but nothing changes. I set up a new m4.large instance and set 4 GB for Prometheus.

~# ps aux | grep promet

ubuntu 17110 1.6 0.6 489068 56784 ? Sl 09:28 0:07 /usr/sbin/prometheus -config.file=/etc/prometheus.yml -storage.local.path=/opt/prometheus/data -web.listen-address=:9090 -storage.local.retention=720h --storage.local.target-heap-size=4294967296 -storage.local.chunk-encoding-version=2 -web.console.libraries=/usr/share/prometheus/console_libraries -web.console.templates=/usr/share/prometheus/consoles -web.external-url=http://localhost:9090/prometheus/

All the previous commands show the same results.

#docker exec -it pmm-server curl --insecure https://172.25.74.241:42002/metrics-hr | tail

mysql_global_status_threads_running 1

# HELP mysql_global_status_uptime Generic metric from SHOW GLOBAL STATUS.

# TYPE mysql_global_status_uptime untyped

mysql_global_status_uptime 1.6333843e+07

# HELP mysql_global_status_uptime_since_flush_status Generic metric from SHOW GLOBAL STATUS.

# TYPE mysql_global_status_uptime_since_flush_status untyped

mysql_global_status_uptime_since_flush_status 1.6333843e+07

# HELP mysql_up Whether the MySQL server is up.

# TYPE mysql_up gauge

mysql_up 1

~# docker exec -it pmm-server bash -c 'time curl --insecure https://172.25.74.241:42002/metrics-hr >/dev/null'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 49001 100 49001 0 0 206k 0 --:--:-- --:--:-- --:--:-- 207k

real 0m0.246s

user 0m0.054s

sys 0m0.067s

~# cat /usr/local/percona/pmm-client/pmm.yml

server_address: pmm.qa.com
client_address: 172.25.74.241
bind_address: 172.25.74.241
client_name: DB-qa-master

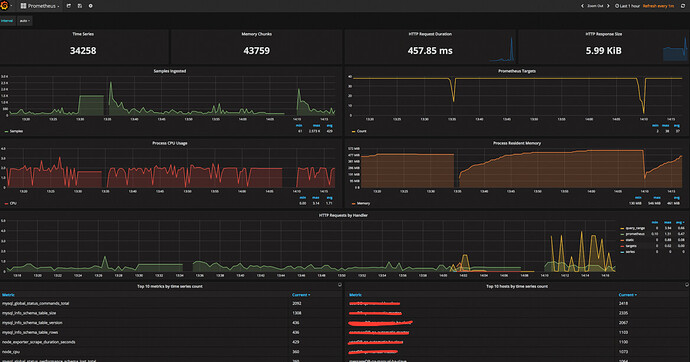

Maybe screenshots will help?

One more interesting thing: CPU usage is unbelievably high for pmm-server.

top - 11:43:16 up 2:22, 1 user, load average: 1.87, 2.03, 2.09

Tasks: 148 total, 2 running, 146 sleeping, 0 stopped, 0 zombie

%Cpu(s): 98.7 us, 0.3 sy, 0.0 ni, 0.2 id, 0.2 wa, 0.0 hi, 0.7 si, 0.0 st

KiB Mem: 8175648 total, 3409272 used, 4766376 free, 177884 buffers

KiB Swap: 0 total, 0 used, 0 free. 2531164 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

29714 ubuntu 20 0 645656 277648 12844 S 196.4 3.4 3:29.37 prometheus

29729 root 20 0 207684 12232 4832 S 1.7 0.1 0:01.69 node_exporter

29704 998 20 0 1379428 75344 6912 S 1.0 0.9 0:00.92 mysqld

7 root 20 0 0 0 0 S 0.3 0.0 0:05.37 rcu_sched

29670 root 20 0 117344 14932 3920 S 0.3 0.2 0:00.36 supervisord

29705 ubuntu 20 0 231832 21688 10252 S 0.3 0.3 0:00.52 consul

29782 ubuntu 20 0 215512 11076 4712 S 0.3 0.1 0:00.31 orchestrator

1 root 20 0 33640 2940 1468 S 0.0 0.0 0:01.74 init

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kthreadd

I notice that “--storage.local.target-heap-size=4294967296” has two dashes, whereas all other options (for example “-storage.local.chunk-encoding-version=2” and “-storage.local.retention=720h”) have one dash. Maybe the update was not applied because double dashes were used, whereas Prometheus may require one dash? Please check.
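Whichever dash form is used, the value Prometheus was started with can be pulled out of its command line and compared with the expected number. The snippet below is a minimal sketch: the `cmdline` string is shortened from the `ps` output in this thread, and the `sed` pattern is only an illustration, not anything PMM ships.

```shell
# Sample command line, shortened from the ps output earlier in the thread.
cmdline='/usr/sbin/prometheus -config.file=/etc/prometheus.yml --storage.local.target-heap-size=4294967296 -storage.local.chunk-encoding-version=2'

# Extract the digits after the option name. The pattern only requires one
# leading dash, so it matches both -option=... and --option=...
heap_bytes=$(printf '%s\n' "$cmdline" |
    sed -n 's/.*-storage\.local\.target-heap-size=\([0-9][0-9]*\).*/\1/p')

echo "target heap size: $heap_bytes bytes"
```

On a live server, `cmdline` could be filled from the `ps ax | grep prometheus` command suggested earlier instead of the hard-coded sample.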

Thanks, I will check. I will also try an instance with 4 cores.

I have changed the instance type to m4.xlarge and set 4 GB of memory for Prometheus. After 3 hours of work everything looks good. FINALLY.

I am very surprised that Prometheus uses the CPU so intensively while almost all memory is free: 1.07 GB used out of 15.67 GB.

Thanks Mykola, for your patience and help.

Also thanks to RoelVandePaar; two dashes are OK.

Can you tune memory usage according to the guide?

Percona Monitoring and Management

I did it as described in the guide.

I used this command:

"docker run -d -p 80:80 --volumes-from pmm-data --name pmm-server --restart always -e METRICS_MEMORY=4194304 percona/pmm-server:1.2.0"

It looks like more memory is not needed for Prometheus right now.

Has CPU usage become normal?

Do you have any issues right now?

How many database instances do you have?

Probably not, but I don’t want to change anything while it works fine )))

CPU usage is permanently high (50-70%). I checked the prod PMM, which is still on version 1.1.1. It runs on an m4.xlarge, supports about 50 instances, and its CPU usage is lower than on the 1.2.0 PMM, around 40-50%.

For now, PMM works with 21 DB instances.

Stateros

Hm, strange: for 21 instances, memory usage should be bigger and CPU load should be lower.

Can you share the output of the following command?

docker inspect pmm-server

Sure, here

~# docker inspect pmm-server

[

{

"Id": "8151d099cf06795b1e2ffa0ed8f23b3b769e70e8d448d507c2f8962afa06b20a",

"Created": "2017-07-21T06:51:24.176130151Z",

"Path": "/opt/entrypoint.sh",

"Args": [],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 20511,

"ExitCode": 0,

"Error": "",

"StartedAt": "2017-07-21T12:51:37.667083601Z",

"FinishedAt": "2017-07-21T12:51:37.13950323Z"

},

"Image": "sha256:eb82a0e154c810e42bd8f1506696f88e10719b6c5c87ad0aa21d15096c6b6b74",

"ResolvConfPath": "/var/lib/docker/containers/8151d099cf06795b1e2ffa0ed8f23b3b769e70e8d448d507c2f8962afa06b20a/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/8151d099cf06795b1e2ffa0ed8f23b3b769e70e8d448d507c2f8962afa06b20a/hostname",

"HostsPath": "/var/lib/docker/containers/8151d099cf06795b1e2ffa0ed8f23b3b769e70e8d448d507c2f8962afa06b20a/hosts",

"LogPath": "/var/lib/docker/containers/8151d099cf06795b1e2ffa0ed8f23b3b769e70e8d448d507c2f8962afa06b20a/8151d099cf06795b1e2ffa0ed8f23b3b769e70e8d448d507c2f8962afa06b20a-json.log",

"Name": "/pmm-server",

"RestartCount": 0,

"Driver": "devicemapper",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": null,

"HostConfig": {

"Binds": null,

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {}

},

"NetworkMode": "default",

"PortBindings": {

"80/tcp": [

{

"HostIp": "",

"HostPort": "80"

}

]

},

"RestartPolicy": {

"Name": "always",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": [

"pmm-data"

],

"CapAdd": null,

"CapDrop": null,

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "",

"Cgroup": "",

"Links": null,

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": null,

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DiskQuota": 0,

"KernelMemory": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": -1,

"OomKillDisable": false,

"PidsLimit": 0,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0

},

"GraphDriver": {

"Name": "devicemapper",

"Data": {

"DeviceId": "14",

"DeviceName": "docker-202:1-528005-536a58e06e4f7533f14030ad1287b38fb34a0370233cbb92b7904be292dd45f0",

"DeviceSize": "10737418240"

}

},

"Mounts": [

{

"Name": "127bf9b967abff17998dc64cf09de329e61e865dbfa7a265ae534a5a4ef74326",

"Source": "/var/lib/docker/volumes/127bf9b967abff17998dc64cf09de329e61e865dbfa7a265ae534a5a4ef74326/_data",

"Destination": "/var/lib/grafana",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

},

{

"Name": "9adde5bd070013481c4f70fa61836245c72d286c6389fd6fbc823c21b9fee662",

"Source": "/var/lib/docker/volumes/9adde5bd070013481c4f70fa61836245c72d286c6389fd6fbc823c21b9fee662/_data",

"Destination": "/var/lib/mysql",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

},

{

"Name": "a860793ea7339c7c592ddc03b8f5be114ea0f367f440722f431f7dccd39fc618",

"Source": "/var/lib/docker/volumes/a860793ea7339c7c592ddc03b8f5be114ea0f367f440722f431f7dccd39fc618/_data",

"Destination": "/opt/consul-data",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

},

{

"Name": "88cb8e99bc39144cb2a511afe466e22380e2c3fb96f54f6917ad01f58384f276",

"Source": "/var/lib/docker/volumes/88cb8e99bc39144cb2a511afe466e22380e2c3fb96f54f6917ad01f58384f276/_data",

"Destination": "/opt/prometheus/data",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

}

],

"Config": {

"Hostname": "8151d099cf06",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"443/tcp": {},

"80/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"METRICS_MEMORY=4194304",

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

],

"Cmd": [

"/opt/entrypoint.sh"

],

"Image": "percona/pmm-server:1.2.0",

"Volumes": null,

"WorkingDir": "/opt",

"Entrypoint": null,

"OnBuild": null,

"Labels": {

"build-date": "20161214",

"license": "GPLv2",

"name": "CentOS Base Image",

"vendor": "CentOS"

}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "5918ef1e9225c1286002f625168dacff341909b1931756ab2bedc7919a942402",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {

"443/tcp": null,

"80/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "80"

}

]

},

"SandboxKey": "/var/run/docker/netns/5918ef1e9225",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "73b31f9d4d274ac894119231b8f9d6124842efdc642fb2b24e263f33dc682a5f",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:02",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "db1d2b01f9f33f3a873f29a2f49bf0dd2bb5ccdbb6490e6180d087e8d0ba12ab",

"EndpointID": "73b31f9d4d274ac894119231b8f9d6124842efdc642fb2b24e263f33dc682a5f",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02"

}

}

}

}

]
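For checking only the memory-related settings, the full output above can be narrowed down with the `--format` option of `docker inspect`. The two helper functions below are hypothetical names introduced for illustration; the field paths (`.Config.Env`, `.HostConfig.Memory`) are the ones visible in the JSON above.

```shell
# Print only the environment variables of a container as a JSON array.
# "$1" is the container name, for example pmm-server.
show_container_env() {
    docker inspect --format '{{json .Config.Env}}' "$1"
}

# Print the container memory limit in bytes (0 means no limit is set).
show_container_memory_limit() {
    docker inspect --format '{{.HostConfig.Memory}}' "$1"
}
```

Calling `show_container_env pmm-server` on the server from this thread should list `METRICS_MEMORY=4194304` among the entries, and `show_container_memory_limit pmm-server` should print the `Memory` value shown above.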

If you want, you can try to clean up the Prometheus database.

Note that after this action you will lose all data collected in Metrics Monitor (the Grafana graphs).

I think it should fix the high CPU load caused by Prometheus.

# stop prometheus

docker exec -it pmm-server supervisorctl stop prometheus

# backup prometheus database

docker cp pmm-server:/opt/prometheus/data /tmp/prometheus-bak

# remove prometheus database

docker exec -it pmm-server bash -c 'rm -rf /opt/prometheus/data/*'

# start prometheus

docker exec -it pmm-server supervisorctl start prometheus
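The four steps above could also be wrapped in one function that stops as soon as a step fails, so the data is never removed without a backup. This is a hedged sketch, not an official PMM procedure: the function name `reset_prometheus_data` is hypothetical, and `-it` is dropped on the assumption that the commands run from a script without a terminal.

```shell
# Sketch: stop, back up, wipe, and restart Prometheus inside a container.
# "$1" is the container name (for example pmm-server),
# "$2" is a backup path that must not exist yet (for example /tmp/prometheus-bak).
reset_prometheus_data() {
    container="$1"
    backup_dir="$2"

    # Stop Prometheus so its data files are not changing during the copy.
    docker exec "$container" supervisorctl stop prometheus || return 1

    # Copy the data directory out of the container.
    # If this fails, Prometheus stays stopped and must be started by hand.
    docker cp "$container":/opt/prometheus/data "$backup_dir" || return 1

    # Refuse to delete anything unless the backup directory now exists.
    [ -d "$backup_dir" ] || return 1

    # Remove the database, then start Prometheus again.
    docker exec "$container" bash -c 'rm -rf /opt/prometheus/data/*' || return 1
    docker exec "$container" supervisorctl start prometheus
}
```

A call such as `reset_prometheus_data pmm-server /tmp/prometheus-bak` would then run the same commands as listed above, with the same consequence of losing the collected metrics.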