Hello everyone,

I’m looking for some guidance and best practices regarding the configuration of pgBackRest in a multi-data center Patroni PostgreSQL setup.

Current Setup:

-

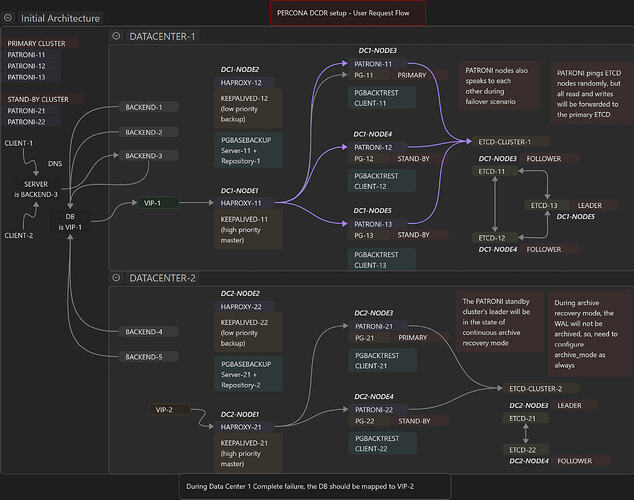

Two data centers (DC1 & DC2)

-

DC1: Hosts the primary Patroni cluster and a pgBackRest repository server (

repo1) -

DC2: Hosts a standby Patroni cluster (configured for synchronous replication)

-

-

In DC2, the standby cluster retrieves WAL archives from the pgBackRest server in DC1 using a recovery command.
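
To make the WAL retrieval path concrete, the standby side is configured roughly as in the sketch below. This is illustrative only: the stanza name `main`, the host `dc1-primary.example.com`, and the port are placeholders rather than our real values. It also assumes the pgBackRest client configuration on the DC2 nodes (not shown) points at the repository server in DC1.

```yaml
# Patroni standby cluster settings (sketch; all names are placeholders)
bootstrap:
  dcs:
    standby_cluster:
      host: dc1-primary.example.com   # placeholder: endpoint of the DC1 primary
      port: 5432
      # Fetch archived WAL segments from the pgBackRest repository (repo1 in DC1)
      restore_command: pgbackrest --stanza=main archive-get %f "%p"
```

With this layout, every `archive-get` call from DC2 depends on repo1 in DC1 being reachable, which is the single point of failure I am asking about.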

Concern:

My concern is about high availability of archived WAL files. If the pgBackRest repository server in DC1 (repo1) becomes unavailable, the standby Patroni cluster in DC2 won’t be able to fetch WALs, which would interrupt replication.

Alternatively, configuring the standby to fetch WALs directly from the primary PostgreSQL nodes only works until those WALs are deleted (due to retention policies), which is also risky.

Question:

- What is the recommended design for ensuring the standby Patroni cluster in DC2 always has access to the required WAL files, even if DC1’s pgBackRest repository server goes down?
- Is it best practice to maintain a pgBackRest repository server in both DCs and set up synchronous/asynchronous mirroring between them?
- Are there any other reliable approaches to ensure WAL availability and minimize data loss or downtime in this scenario?

Any architecture diagrams, example configurations, or experiences from similar environments would be greatly appreciated!

Thank you!